NLP


Latest revision as of 13:09, 8 November 2018

The Natural Language Processor (NLP) is an embedded engine in HSYCO that can interpret plain text commands, like "turn on the lights in the living room", and execute the corresponding commands to change the state of data points or call user code in EVENTS, JavaScript or Java.

The NLP accepts commands to multiple distinct items, like "turn off the lights in the kitchen, bathroom and entrance", but also group commands like "turn off all lights in the attic" and multiple distinct, concatenated commands, like "turn on the lights in the kitchen and open the door".

Natural language commands can originate from the speech recognition feature of HSYCO's iOS and Android apps, from any text message, such as Telegram or SMS messages, or, thanks to the integration via IFTTT, from Google Home devices and the Google Assistant service.

The NLP engine supports multiple languages and can concurrently interpret text commands in any of the languages it has been trained to understand.

Finally, the commands don't need to have a rigid format, and "turn on the lights in the kitchen" will work just as well as "turn on kitchen's lights" or even "lights kitchen on".


Training the NLP

The NLP must be trained to understand natural language commands. Training consists of writing a simple text file for each language, where you define the verbs, areas and text keywords, and their association to data points or user code.

The NLP engine doesn't know the grammar and semantic rules of any language. It works purely by text matching: each incoming text is ranked against the defined set of commands, looking for the command with the best overall match.

The training text files are human readable. They are currently written with a plain text editor, such as the one in the File Manager, or uploaded directly to the HSYCO main directory. As usual, when these files are modified they are automatically reloaded, and changes become effective within a few seconds.

The name of the training files is nlpdictionary_xx.txt, where xx is the standard language code (based on ISO 639-1 codes). For example, nlpdictionary_en.txt would be the training file for commands in English, and nlpdictionary_de.txt for German.

The nlpdictionary file format

The nlpdictionary_xx.txt files consist of four sections: verbs, areas, keywords and commands. The areas section is optional: if areas are not used in the commands section, it can be left empty or omitted entirely. All other sections are required and must contain their respective items.

This is an example of a simple dictionary file:

# A simple nlpdictionary_en.txt file

(verbs)
on : on; turn on
off : off; turn off
up : open; up
down : close; down
set : set; dim

(areas)
outside : garden; outside; external; exterior
firstfloor : first; first floor
attic : attic; second; second floor

(keywords)
bath : bathroom; bathrooms
bedroom : bedroom; bed room; bedrooms; bed rooms
door : door; doors
entrance : entrance
garage : garage
light : light; lights
kitchen : kitchen
counter : counter
sofa : sofa; sofas

(commands)
m.autom.14 : outside; garage door; ; up down
m.light.11 : outside; entrance light; group_lights; on off
m.light.55 : firstfloor; kitchen light; group_1 group_lights; on off
m.light.66 : firstfloor; kitchen counter light; group_1 group_lights; on off
m.light.91 : attic; bath light; group_lights; on off
m.light.92 : attic; bedroom sofa light; group_lights; on off up down set

Blank lines and leading or trailing spaces are ignored. The '#' character is reserved and denotes a comment. Text following '#' is ignored until the end of line is reached.

All text is converted to lower case, so capitalization is ignored in the file format and for matching purposes.
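To make the file format rules concrete, here is a minimal Python sketch of a reader for an nlpdictionary file. It is not HSYCO's parser. The function name parse_dictionary is hypothetical, and so is the returned layout, which maps each section to a list of (left, right) pairs split at the first ":".

```python
import re

SECTIONS = ("verbs", "areas", "keywords", "commands")


def parse_dictionary(text):
    """Split nlpdictionary text into {section: [(left, right), ...]}.

    Applies the rules described above: text after '#' is a comment,
    blank lines and surrounding spaces are ignored, and everything is
    lower-cased. The optional (areas) section simply stays empty.
    """
    sections = {name: [] for name in SECTIONS}
    current = None
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip().lower()
        if not line:
            continue
        header = re.fullmatch(r"\((\w+)\)", line)
        if header:
            current = header.group(1)
            if current not in sections:
                raise ValueError(f"unknown section: {current}")
            continue
        if current is None:
            raise ValueError(f"line outside any section: {line!r}")
        left, sep, right = line.partition(":")
        if not sep:
            raise ValueError(f"missing ':' in {line!r}")
        sections[current].append((left.strip(), right.strip()))
    return sections


sample = """
# A tiny dictionary
(verbs)
on : on; turn on
(keywords)
light : Light; lights   # aliases
(commands)
m.light.11 : ; light; ; on
"""
parsed = parse_dictionary(sample)
print(parsed["keywords"])  # [('light', 'light; lights')]
```

Each right-hand side still has to be split on ";" (aliases) or on spaces (command fields) by later steps.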

Auto-completion

The auto-completion feature allows you to initially create a file containing the commands section only. Based on the areas, verbs and general keywords found in commands, the NLP engine will automatically add the missing sections and keywords. You will then simply add the aliases to each keyword. The auto-completion feature will also automatically add any missing keywords to the appropriate section whenever it finds a command with undefined keywords.

The (verbs), (areas) and (keywords) sections

The (verbs), (areas) and (keywords) sections share the same format: one or more lines, each starting with a unique id, followed by ":" and a semicolon-separated list of text keywords. Each text keyword is the exact text that the NLP will search for in the natural language text commands. It is usually important to define multiple "alias" texts for the same keyword id, both to support different words with the same meaning and to accept typical variations in the text returned by the various speech recognition systems. For example, defining the "bedroom" keyword with alternative text aliases, like "bed room", may improve matching. In most cases it is also important to add the plural form of the word:

bedroom : bedroom; bed room; bedrooms; bed rooms

All punctuation characters in the keyword text are replaced with a single space. Also, multiple consecutive spaces are not significant, and are converted to a single space.
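The normalization rule can be sketched in a few lines of Python. This sketch assumes that "punctuation" means any character that is not a letter, digit, underscore or whitespace. HSYCO's exact character set is not documented here, and normalize is a hypothetical helper name.

```python
import re


def normalize(text):
    """Lower-case the text, replace each punctuation character with a
    space, then collapse consecutive spaces into one."""
    text = re.sub(r"[^\w\s]", " ", text.lower())
    return " ".join(text.split())


print(normalize("Bed-Room's   lights!"))  # bed room s lights
```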

You should avoid conflicts or ambiguities in the definition of all keywords:

- All id keys must be unique within each section;

- Never declare the same text for different keywords;

- Avoid ambiguities between words defining verbs and words defined in areas or general keywords. Compound words like "open space" are tricky, because "open" is very likely also defined as a verb, and should be avoided.
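Some of these rules lend themselves to an automatic check. The following Python sketch uses illustrative helpers that are not part of HSYCO, and it assumes each section has already been loaded into an {id: [aliases]} dictionary. The first helper reports alias texts claimed by more than one id. The second flags area or keyword aliases that contain a verb alias as a whole word, like "open space". Because a Python dict cannot hold duplicate keys, duplicate ids would have to be caught while reading the file.

```python
from collections import defaultdict


def alias_conflicts(sections):
    """Map each alias text used by more than one id to the ids using it."""
    owners = defaultdict(set)
    for section, entries in sections.items():
        for key, aliases in entries.items():
            for alias in aliases:
                owners[alias].add(f"{section}:{key}")
    return {alias: sorted(ids) for alias, ids in owners.items() if len(ids) > 1}


def verb_overlaps(sections):
    """List (section, id, alias) where an area or keyword alias contains
    a verb alias as a whole word or phrase."""
    verbs = {a for aliases in sections.get("verbs", {}).values() for a in aliases}
    hits = []
    for section in ("areas", "keywords"):
        for key, aliases in sections.get(section, {}).items():
            for alias in aliases:
                if any(f" {v} " in f" {alias} " for v in verbs):
                    hits.append((section, key, alias))
    return hits


sections = {
    "verbs": {"up": ["open", "up"], "on": ["on", "turn on"]},
    "areas": {"office": ["open space", "office"]},
    "keywords": {"light": ["light", "lights"], "lamp": ["lamp", "lights"]},
}
print(alias_conflicts(sections))  # {'lights': ['keywords:lamp', 'keywords:light']}
print(verb_overlaps(sections))    # [('areas', 'office', 'open space')]
```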

The (commands) section

The (commands) section lists all possible commands and their association to I/O command data points or to user code.

The general format is:

<I/O data point> | <user command> : <area id>; <keywords>; <groups>; <verbs>

All four fields after the colon must be present. The area id and groups fields can be left empty, but their ";" separators must be retained. For example, this is a valid command line:

m.autom.14 : ; garage door; ; up down

While this is an error:

m.autom.14 : garage door; up down
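These field rules can be sketched in Python. parse_command is a hypothetical helper, and it enforces only the rules stated here:

- exactly four ";"-separated fields after the colon;
- at most one area;
- at least one keyword and one verb.

It does not handle the special default user event form described further below, and HSYCO may perform other checks.

```python
def parse_command(line):
    """Split '<target> : <area>; <keywords>; <groups>; <verbs>'."""
    target, sep, rest = line.partition(":")
    if not sep:
        raise ValueError("missing ':'")
    fields = [field.strip() for field in rest.split(";")]
    if len(fields) != 4:
        raise ValueError(f"expected 4 fields, got {len(fields)}")
    area, keywords, groups, verbs = fields
    if len(area.split()) > 1:
        raise ValueError("only one area can be defined for a command")
    if not keywords.split() or not verbs.split():
        raise ValueError("at least one keyword and one verb are required")
    return {
        "target": target.strip(),
        "area": area or None,  # an empty area field means "no area"
        "keywords": keywords.split(),
        "groups": groups.split(),
        "verbs": verbs.split(),
    }


print(parse_command("m.autom.14 : ; garage door; ; up down"))
try:
    parse_command("m.autom.14 : garage door; up down")
except ValueError as err:
    print("error:", err)  # error: expected 4 fields, got 2
```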

To the left of the colon character you can specify an I/O data point or a user command.

The I/O data point must be writable and accept the verb id as a value. "on", "off", "up", "down" are typical verb ids passed as values to the I/O data points but, in general, the I/O data point matching a command will simply be written with the matching verb id as value, whatever that is.

There are two exceptions to this.

The first exception applies to data points that represent dimmer lights: the up and down verbs are also accepted and automatically converted to a level, increasing or decreasing the current level by 20%.
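As an illustration only, the dimmer rule might look like this in Python. The text above does not say whether "20%" means 20 percentage points or 20% of the current level. This sketch assumes 20 points on a 0-100 scale, clamped to that range, and the function name is hypothetical.

```python
def dimmer_level(current, verb, step=20):
    """New dimmer level (0-100) after an 'up' or 'down' verb.

    Assumption: the 20% change is 20 percentage points, clamped to 0-100.
    """
    if verb == "up":
        return min(100, current + step)
    if verb == "down":
        return max(0, current - step)
    raise ValueError(f"not a dimmer step verb: {verb}")


print(dimmer_level(50, "up"), dimmer_level(90, "up"), dimmer_level(10, "down"))  # 70 100 0
```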

The second exception is the verb id "set". When this verb is used in a command, the NLP will search the input command for a single integer number, optionally followed by a "%" character. If found, this value will be passed to the data point. If more than one number is found, the command will fail to match.
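The number extraction for "set" can be sketched as follows, assuming that a number means a run of decimal digits. The optional "%" needs no special handling because only the digits are captured. set_value is a hypothetical name, and returning None stands for "the command does not match".

```python
import re


def set_value(text):
    """Return the single integer in `text`, or None if there is no number
    or more than one (in which case the command fails to match)."""
    numbers = re.findall(r"\d+", text)
    return int(numbers[0]) if len(numbers) == 1 else None


print(set_value("set the kitchen light to 40%"))  # 40
print(set_value("set 2 lights to 50"))            # None
```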

An NLP command can also be associated with a user call, so that the NLP can trigger any EVENTS, JavaScript or Java code on a matching command. To do this, simply use the standard user command notation, "user <name>=<param>", to the left of the colon character. For example, add the following line to the nlpdictionary example:

user gates=door : outside; entrance door; ; up

This line will match a natural language text like "open the entrance door" and trigger a user event with "gates" as name and "door" as parameter.

You can pass the whole matching natural language text as parameter to a user event, writing "*" as parameter, after the "=" character. For example:

user nlanguagescene=* : ; scene; ; run

This allows you to interpret the matching text in your application code.

In combination with the "set" special verb id, you can also write "?" as parameter, after the "=" character. This will pass the number found in the command text. If no number is found, the NLP will not trigger the user event. For example:

user audio_level=? : ; stereo volume; ; set

Finally, a special format of the user command is the default user event, where all text that doesn't match any of the defined commands is sent as parameter to a user event. The default user event is defined with a single "*" character after the colon, for example:

user nlanguage=* : *
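The three parameter forms described above can be summarized in a small Python sketch. user_event is a hypothetical helper, not HSYCO code. It takes the text to the left of the colon and the matching natural language text. It returns the (name, parameter) pair that would be passed to the user event, or None when a "?" parameter does not find exactly one number. The default user event applies the same "*" handling, but only to text that matched no other command.

```python
import re


def user_event(target, text):
    """Resolve 'user <name>=<param>' against the matching text."""
    match = re.fullmatch(r"user\s+([^=\s]+)=(.*)", target.strip())
    if not match:
        raise ValueError(f"not a user command: {target!r}")
    name, param = match.group(1), match.group(2).strip()
    if param == "*":  # pass the whole matching text
        return name, text
    if param == "?":  # pass the single number found, if any
        numbers = re.findall(r"\d+", text)
        return (name, numbers[0]) if len(numbers) == 1 else None
    return name, param  # literal parameter


print(user_event("user gates=door", "open the entrance door"))       # ('gates', 'door')
print(user_event("user audio_level=?", "set stereo volume to 30"))  # ('audio_level', '30')
```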

Each command data point or user command is associated with an area, general keywords and verbs. The area id is not required, and you can write a whole nlpdictionary without using areas if you wish. You can only define one area per command.

The keywords field is a space separated list of generic keyword ids (i.e. those listed under the keywords section). You need to define at least one keyword for each command.

The groups field will be discussed later, as it deals with matching of ambiguous commands.

The verbs field is a space separated list of verb keyword ids (listed under the verbs section). You need to define at least one verb for each command.

Matching commands

When a text is passed to the NLP, the first step is to detect the text language. This is done by counting the number of keywords in the text that are found in each nlpdictionary file. If there is only one nlpdictionary defined, language detection is obviously skipped.
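Language detection by keyword counting could be sketched like this in Python. This example makes several assumptions:

- each dictionary file name maps to a flat list of its alias texts;
- aliases are matched as whole phrases on an already normalized text;
- ties go to the first dictionary.

```python
def detect_language(text, dictionaries):
    """Return the dictionary file whose aliases occur most often in text."""
    if len(dictionaries) == 1:  # single dictionary: nothing to detect
        return next(iter(dictionaries))
    padded = f" {text.lower()} "
    scores = {
        name: sum(f" {alias} " in padded for alias in aliases)
        for name, aliases in dictionaries.items()
    }
    return max(scores, key=scores.get)  # ties go to the first dictionary


dictionaries = {
    "nlpdictionary_en.txt": ["turn on", "lights", "kitchen"],
    "nlpdictionary_it.txt": ["accendi", "luci", "cucina"],
}
print(detect_language("accendi le luci in cucina", dictionaries))  # nlpdictionary_it.txt
```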

The NLP looks for the best match between the incoming text and all commands defined in nlpdictionary. This is done by ranking each command defined in nlpdictionary based on the number of keywords that are found in the incoming text. The command with the highest rank is then executed.
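The ranking step can be illustrated with a simplified Python sketch. It scores each command by the number of its area and keyword ids whose aliases appear in the text. This is not HSYCO's scoring: the log messages show rank values such as 66 or 100, while this sketch uses a plain count. To keep the example short, verbs are left out of the score, and the names and data layout are hypothetical.

```python
def rank_commands(text, aliases, commands):
    """Return (best score, sorted targets sharing it).

    aliases: keyword id -> alias texts; commands: target -> ids it needs.
    """
    padded = f" {text.lower()} "

    def found(key):
        return any(f" {alias} " in padded for alias in aliases.get(key, [key]))

    scores = {target: sum(found(k) for k in ids) for target, ids in commands.items()}
    best = max(scores.values(), default=0)
    if best == 0:
        return 0, []  # nothing matched: "no match"
    return best, sorted(t for t, s in scores.items() if s == best)


aliases = {"garage": ["garage"], "door": ["door", "doors"],
           "light": ["light", "lights"], "kitchen": ["kitchen"], "counter": ["counter"]}
commands = {"m.autom.14": ["garage", "door"],
            "m.light.55": ["kitchen", "light"],
            "m.light.66": ["kitchen", "counter", "light"]}
print(rank_commands("open garage door", aliases, commands))       # (2, ['m.autom.14'])
print(rank_commands("turn on kitchen lights", aliases, commands))  # (2, ['m.light.55', 'm.light.66'])
```

The second call returns two commands with the same top score, which is exactly the situation discussed in the next section.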

Resolving ambiguities

If the ranking process results in two or more commands having the exact same top ranking, a potential ambiguity condition is raised and, by default, ambiguous commands are not executed.

But in some cases you may want to issue an ambiguous command to a consistent group of data points, for example all lights in a specific area of a house. Looking at our initial example, a text command like "turn off first floor lights" should in fact turn off m.light.55 and m.light.66 rather than being rejected as ambiguous.

In order to do this, simply define one or more group ids for each command. In the commands section line syntax, the groups field is the one between the keywords and verbs fields:

<I/O data point> | <user command> : <area id>; <keywords>; <groups>; <verbs>

The groups field is a space-separated list of group ids. You can associate more than one group id with a command. Group ids are simply text keywords, and don't need to be predefined.

In our example, both m.light.55 and m.light.66 are tagged to belong to two groups, "group_1" and "group_lights", while m.autom.14 is not associated to any group:

m.autom.14 : outside; garage door; ; up down
m.light.55 : firstfloor; kitchen light; group_1 group_lights; on off
m.light.66 : firstfloor; kitchen counter light; group_1 group_lights; on off

If all commands having the same top ranking belong to the same group, they are all executed; otherwise an ambiguity condition is raised and no command is executed.

The command "turn off first floor lights" will be executed, sending the "off" value to both m.light.55 and m.light.66, because both are tagged as belonging to "group_1".
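The group rule reduces to a set intersection, as in this Python sketch. resolve is a hypothetical helper, and groups maps each target to its group ids. A single top-ranked command is simply executed. Several top-ranked commands are executed only if they share at least one group id; otherwise the result is None, standing for an ambiguity error.

```python
def resolve(top, groups):
    """Targets to execute for the top-ranked commands, or None if ambiguous."""
    if not top:
        return None  # no match at all
    if len(top) == 1:
        return list(top)
    shared = set.intersection(*(set(groups.get(t, ())) for t in top))
    return list(top) if shared else None


groups = {"m.autom.14": [], "m.light.55": ["group_1", "group_lights"],
          "m.light.66": ["group_1", "group_lights"]}
print(resolve(["m.light.55", "m.light.66"], groups))  # ['m.light.55', 'm.light.66']
print(resolve(["m.light.55", "m.autom.14"], groups))  # None
```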

Processing multiple commands

Besides group commands, the NLP also accepts commands to multiple distinct items, like "turn off the lights in the kitchen, bathroom and entrance", as well as multiple distinct, concatenated commands, like "turn on the lights in the kitchen and open the door".

In order to identify concatenated commands, after the incoming text is associated with a language-specific nlpdictionary file, the text is scanned to identify all verb keywords. If more than one verb keyword is found, the text command is split into two or more distinct commands, which are processed individually.

Note that the verb positions must be consistent across the concatenated commands. For example, a text like "turn on the lights in the bathroom and open the door" will be correctly split into "turn on the lights in the bathroom and" and "open the door", because both commands begin with a verb. Likewise, "bathroom lights on and kitchen lights off" will work fine, being split into "bathroom lights on" and "and kitchen lights off", where both commands have the verb at the end. But something like "bathroom lights on and turn off the kitchen lights" will not be interpreted correctly.
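The splitting rule can be approximated by the following Python sketch, which is not HSYCO's algorithm. It locates verb aliases word by word, trying the longest alias first. If the text starts with a verb, it cuts before every later verb. Otherwise it assumes that verbs close each command, and cuts after every verb but the last. Punctuation is left in place, and the function name is hypothetical.

```python
def split_commands(text, verb_aliases):
    """Split text into sub-commands at verb keywords."""
    words = text.lower().split()
    phrases = sorted((a.lower().split() for a in verb_aliases), key=len, reverse=True)
    spans, i = [], 0
    while i < len(words):
        for phrase in phrases:
            if words[i:i + len(phrase)] == phrase:
                spans.append((i, i + len(phrase)))  # word range of this verb
                i += len(phrase)
                break
        else:
            i += 1
    if len(spans) < 2:
        return [" ".join(words)]  # zero or one verb: a single command
    if spans[0][0] == 0:  # verbs lead: cut before every later verb
        cuts = [start for start, _ in spans[1:]]
    else:  # verbs trail: cut after every verb but the last
        cuts = [end for _, end in spans[:-1]]
    bounds = [0] + cuts + [len(words)]
    return [" ".join(words[a:b]) for a, b in zip(bounds, bounds[1:])]


verbs = ["on", "turn on", "off", "turn off", "open", "up", "close", "down"]
print(split_commands("turn on the lights in the bathroom and open the door", verbs))
# ['turn on the lights in the bathroom and', 'open the door']
print(split_commands("bathroom lights on and kitchen lights off", verbs))
# ['bathroom lights on', 'and kitchen lights off']
```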

The interpretation of a text containing a single command (verb) to multiple distinct items is based on a recursive subtraction approach. The text is first analyzed as a whole and, when the top-level ranking command is identified, it is executed. After this, the text is reprocessed subtracting the keywords that have specifically matched the first command. If the resulting top-level match is consistent with the original ranking, then that command is also executed, recursively.

The log messages

The HSYCO log file can be quite useful to analyze how the NLP processes the submitted text, particularly with complex commands, and to fine tune the nlpdictionary training files.

Log messages generated by the NLP when commands are executed start with "NLP" and the name of the nlpdictionary file, followed by the text command, the data point, the command match ranking and the verb. For example:

- NLP [nlpdictionary_en.txt] COMMAND: Open garage door: m.autom.14, 100, up

For group commands, all matching data point names are listed in the log message:

- NLP [nlpdictionary_en.txt] COMMAND: Turn on the lights: m.light.91 m.light.92 m.light.11 m.light.55 m.light.66, 100, on

In the example above, all commands matching the text are tagged with a common group id, "group_lights". But we can easily introduce an ambiguity on the same text by removing "group_lights" from one or more of the matching commands, and get an ambiguity error instead:

+ NLP [nlpdictionary_en.txt] ERROR: command ambiguity: Turn on the lights: m.light.91 m.light.92 m.light.11 m.light.55 m.light.66

A text that can't be matched to any command is logged as an error:

+ NLP [nlpdictionary_en.txt] ERROR: no match: Turn off the living room fan

As discussed, the interpretation of a text containing a single command to multiple distinct items is based on a recursive subtraction approach. The command "Turn on the lights in the kitchen and bathroom" is an example. The bathroom keyword is matched first and the command is executed. The text is then reprocessed without the bathroom keyword, sending the on command to m.light.55 and m.light.66, because they both match "kitchen" and "lights" and are tagged with a common group id:

- NLP [nlpdictionary_en.txt] COMMAND: Turn on the lights in the kitchen and bathroom: m.light.91, 66, on
- NLP [nlpdictionary_en.txt] COMMAND(R): turn on the lights in the kitchen and: m.light.55 m.light.66, 99, on

The second command, which is executed recursively, is marked as "COMMAND(R)" in the log.
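When analyzing a long log, it can help to extract these fields programmatically. The following Python sketch parses COMMAND and COMMAND(R) lines in the format shown above. The regular expression is inferred only from these examples, including the leading "-" or "+" marker, so other message variants may need adjustments. Error lines return None.

```python
import re

LOG_LINE = re.compile(
    r"^[-+]?\s*NLP \[(?P<file>[^\]]+)\] (?P<kind>COMMAND(?:\(R\))?): "
    r"(?P<text>.*): (?P<points>[^:]+), (?P<rank>\d+), (?P<verb>\S+)$"
)


def parse_log_line(line):
    """Return the fields of an NLP COMMAND log line, or None."""
    m = LOG_LINE.match(line.strip())
    if not m:
        return None
    return {
        "file": m["file"],
        "recursive": m["kind"] == "COMMAND(R)",
        "text": m["text"],
        "points": m["points"].split(),
        "rank": int(m["rank"]),
        "verb": m["verb"],
    }


entry = parse_log_line("- NLP [nlpdictionary_en.txt] COMMAND: Open garage door: m.autom.14, 100, up")
print(entry["points"], entry["rank"], entry["verb"])  # ['m.autom.14'] 100 up
```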

How to send text messages to the NLP engine

Both the iOS and Android versions of the HSYCO App natively support the NLP for speech commands. On iOS the Apple Watch is also supported. Once speech recognition is enabled in the app, the integration with the NLP is fully automatic, and requires no further configuration on the HSYCO server.

There is also a very simple general-purpose API to send a text to the NLP for interpretation using EVENTS, JavaScript or Java. The text can come from any source. For example, you could send any text message received via the Telegram or GSM I/O Servers to the NLP with a simple one-line EVENTS rule, like:

IO telegram.message.777123456 : NLP = telegram.message.777123456
IO gsm.sms.323239223222 : NLP = gsm.sms.323239223222

Another very useful source of natural language commands is the If This Then That (IFTTT) I/O Server. IFTTT allows, among other things, the integration of Google Assistant, so that spoken messages to Google Home devices can control HSYCO.

How to connect Google Home and Assistant to the NLP

Google Home and Google Home Mini speakers enable users to speak voice commands to interact with services through Google's intelligent personal assistant called Google Assistant.

Using the Google Assistant service on IFTTT you can create an IFTTT applet that, using the Webhooks service, will send an HTTPS encrypted request to the IFTTT I/O Server in HSYCO whenever a spoken command is preceded by one or more keywords you choose.

Google Home configuration

Your Google Home device doesn't need any special configuration to work with HSYCO and the NLP.

On the Google account that will be associated with the Google Home speaker you should enable "Web & App Activity" and "Voice & Audio Activity" in the "Activity controls" settings (this is under "Personal info & privacy", then "Manage your Google activity" in the Google account settings page). The URL is: https://myaccount.google.com/activitycontrols

Once you have completed the setup of your Google account, download the Google Home app and proceed with the standard configuration of the Google Home speaker.

Before moving forward, check that Google Home is working by asking questions like "what's the temperature in London?".

IFTTT configuration

If you don't have an account on IFTTT you should create one now.

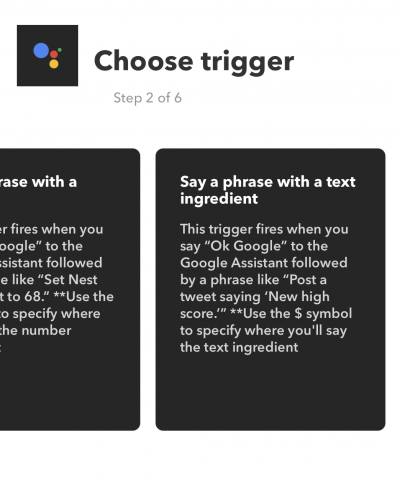

Go to "My Applets" and click "New Applet". Choose the Google Assistant service for "+this", sign in to Google and allow IFTTT to use this service, then select "Say a phrase with a text ingredient" as a trigger.

The trigger fires when you say "Ok Google" to the Google Assistant followed by a phrase like "Please $" or "Server $". The $ symbol specifies where you'll say the text command for the NLP. Note that some words, like "home", are reserved and can't be used in the trigger phrase.

Enter what you want the Assistant to say in response, and the language. Although the NLP can simultaneously understand multiple languages, Google Assistant is limited to one language only.

Select "Webhooks" for the "that" part of the applet.

The URL you specify must have the same server address and port number you use to access the HSYCO Web interface, followed by the /ifttt path and the password defined in the IFTTT I/O Server configuration, for example: https://example.hsyco.net/ifttt/oieojdjeiw48394ejdejdd.

Set the "Method" field to POST, leave the "Content Type" field empty, as it is not required, and add the "TextField" ingredient to the "Body" field.
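To check the HSYCO side before involving Google Home, you could build an equivalent request yourself. This Python sketch only constructs the request, using the standard urllib module. It assumes that the IFTTT I/O Server accepts a POST whose body is just the command text, as configured above. The host and password are the placeholder values from the example URL, and build_nlp_request is a hypothetical name.

```python
import urllib.request


def build_nlp_request(base_url, password, text):
    """Build (but do not send) a POST to <base_url>/ifttt/<password>
    whose body is the plain command text."""
    url = f"{base_url.rstrip('/')}/ifttt/{password}"
    return urllib.request.Request(url, data=text.encode("utf-8"), method="POST")


req = build_nlp_request("https://example.hsyco.net", "oieojdjeiw48394ejdejdd",
                        "turn on the lights in the kitchen")
print(req.get_method(), req.full_url)
# urllib.request.urlopen(req) would actually send it.
```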

You may also want to disable "Receive a notification when this applet runs".

IFTTT I/O Server configuration

The IFTTT I/O Server configuration is very simple. You only need to create a new IFTTT I/O Server and set the same password used in the IFTTT Webhooks URL.

Note that this password must be kept secret and should be long and difficult to guess, as it could otherwise be used to easily feed unauthorized commands to the NLP.
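One way to obtain such a password is Python's secrets module, shown in this short sketch. Hex output is chosen on the assumption that a password made only of letters and digits is the safest to paste into both the Webhooks URL and the I/O Server configuration. The length is an arbitrary example.

```python
import secrets

# 24 random bytes rendered as 48 hexadecimal characters.
password = secrets.token_hex(24)
print(password)
```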

Once Google Home is configured and IFTTT is also configured to connect the Google Assistant service to HSYCO, the following code in EVENTS will send all requests received from IFTTT to the NLP:

io ifttt.request : nlp = io ifttt.request